Your AI Feature Is a Privacy Liability: The Case for Moving Inference to WebGPU

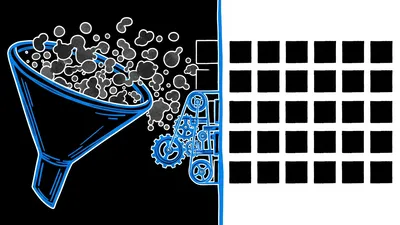

Stop funneling sensitive user data through expensive cloud APIs and start leveraging the untapped GPU power already sitting in your users' browsers.

Most AI features today are just sophisticated data exfiltration pipelines disguised as "innovation." We’ve collectively decided that it’s perfectly normal to pipe every scrap of sensitive user data—be it private journals, medical notes, or proprietary code—through a cloud-based API just to perform a simple summarization task. It’s a privacy nightmare, and honestly, your CFO probably hates the bill you're racking up with OpenAI every month.

The reality is that we are leaving massive amounts of compute on the table. Every modern laptop and smartphone your users own has a GPU that is likely sitting idle while your cloud servers are screaming for mercy. With the arrival of WebGPU, we finally have a way to move inference out of the cloud and into the browser, where it belongs.

The "Cloud-First" Tax

When you send a user's data to a server for inference, you aren't just paying for the GPU cycles. You're paying for the transit, the storage (even if temporary), and the massive liability of holding that data. If your server gets compromised, or if your API provider changes their terms of service, that data is exposed and out of your control.

WebGPU changes the math. It’s not just a "WebGL but better" graphics API; it’s a general-purpose compute API. It lets us treat the user’s graphics card as a parallel processor for arbitrary number crunching.

Why aren't we doing this already?

Until recently, running a Large Language Model (LLM) in a browser was a pipe dream. JavaScript on the CPU is too slow for this kind of workload, and WebGL was never really designed for the heavy lifting required by the matrix multiplications in modern transformers.

WebGPU is different. It provides low-level access to the GPU, allowing for far more efficient memory management and compute shaders. We’re seeing performance gains that make real-time, local inference not just possible, but actually *snappy*.
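To make "compute shaders" concrete, here is a minimal sketch of what GPU compute looks like from JavaScript. It is not how any inference library is implemented; it just doubles every number in an array on the GPU. The names `DOUBLE_SHADER` and `doubleOnGpu` are made up for this illustration, and the function only works in a browser that exposes `navigator.gpu`.

```javascript
// WGSL compute shader: each GPU invocation doubles one element of the array.
const DOUBLE_SHADER = `
@group(0) @binding(0) var<storage, read_write> data: array<f32>;

@compute @workgroup_size(64)
fn main(@builtin(global_invocation_id) id: vec3<u32>) {
  if (id.x < arrayLength(&data)) {
    data[id.x] = data[id.x] * 2.0;
  }
}`;

// Hypothetical helper: doubles every value of a Float32Array on the GPU.
async function doubleOnGpu(input) {
  const adapter = await navigator.gpu?.requestAdapter();
  if (!adapter) throw new Error('WebGPU is not available');
  const device = await adapter.requestDevice();

  // Storage buffer the shader reads and writes.
  const storage = device.createBuffer({
    size: input.byteLength,
    usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_SRC | GPUBufferUsage.COPY_DST,
  });
  device.queue.writeBuffer(storage, 0, input);

  // Separate buffer that can be mapped for reading on the CPU side.
  const readback = device.createBuffer({
    size: input.byteLength,
    usage: GPUBufferUsage.MAP_READ | GPUBufferUsage.COPY_DST,
  });

  const pipeline = device.createComputePipeline({
    layout: 'auto',
    compute: {
      module: device.createShaderModule({ code: DOUBLE_SHADER }),
      entryPoint: 'main',
    },
  });
  const bindGroup = device.createBindGroup({
    layout: pipeline.getBindGroupLayout(0),
    entries: [{ binding: 0, resource: { buffer: storage } }],
  });

  // Record the compute pass plus the copy into the readback buffer.
  const encoder = device.createCommandEncoder();
  const pass = encoder.beginComputePass();
  pass.setPipeline(pipeline);
  pass.setBindGroup(0, bindGroup);
  pass.dispatchWorkgroups(Math.ceil(input.length / 64)); // 64 matches @workgroup_size
  pass.end();
  encoder.copyBufferToBuffer(storage, 0, readback, 0, input.byteLength);
  device.queue.submit([encoder.finish()]);

  await readback.mapAsync(GPUMapMode.READ);
  const result = new Float32Array(readback.getMappedRange().slice(0));
  readback.unmap();
  return result;
}
```

In a WebGPU-capable browser, `await doubleOnGpu(new Float32Array([1, 2, 3]))` should hand back the doubled values. A transformer's matrix multiplications follow the same pattern, just with far bigger buffers and more elaborate shaders.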

Let’s Look at the Code

You don't need to be a CUDA expert to get started. Libraries like Transformers.js have done the heavy lifting of porting popular models to the browser using ONNX Runtime and WebGPU.

Here is how you can run a sentiment analysis model entirely on the client side. No API keys, no inference server costs, and the user's text never leaves the machine.

    // WebGPU support (the `device` option) ships in Transformers.js v3,
    // which is published as @huggingface/transformers.
    import { pipeline } from '@huggingface/transformers';

    async function runInference(text) {
      // Prefer WebGPU; fall back to WASM if the browser doesn't expose navigator.gpu.
      const device = 'gpu' in navigator ? 'webgpu' : 'wasm';

      // The model weights are downloaded on first use and cached by the browser.
      const classifier = await pipeline(
        'sentiment-analysis',
        'Xenova/distilbert-base-uncased-finetuned-sst-2-english',
        { device },
      );

      const output = await classifier(text);
      console.log(output);
      // e.g. [{ label: 'POSITIVE', score: 0.9998 }]
    }

    runInference("This feature is actually private and I love it.");

The first time this runs, the browser downloads the model weights and caches them (Transformers.js uses the browser's Cache API by default). After that, inference happens locally. I’ve seen this run in under 30ms for small models. That’s faster than a round-trip request to an API in a different region.
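If you want to check a latency claim like that on your own hardware, a small timing harness is enough. This is a rough sketch, not a rigorous benchmark: `timeRuns` and `median` are hypothetical helpers, and the numbers will vary a lot with model, device, and browser.

```javascript
// Hypothetical helper: runs an async function several times and returns
// the duration of each run in milliseconds.
async function timeRuns(fn, runs = 5) {
  const durations = [];
  for (let i = 0; i < runs; i++) {
    const start = performance.now();
    await fn();
    durations.push(performance.now() - start);
  }
  return durations;
}

// Median of a list of numbers; less sensitive to one slow outlier than the mean.
function median(values) {
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}
```

Inside `runInference`, something like `const times = await timeRuns(() => classifier(text));` would let you compare `times[0]` (the first, typically slower run) against `median(times.slice(1))` for the warmed-up runs.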

The "Catch" (There's always one)

I’m not suggesting you move a 70B parameter Llama model into the browser today. Unless you want your user's Chrome tab to consume 40GB of RAM and crash their system, you have to be smart.

1. Model Size: You're asking users to download your model. A 100MB model is fine; a 2GB model is a hard sell. Use quantization (4-bit or 8-bit) to shrink your weights without losing too much "intelligence."

2. VRAM Limits: Browsers are stingy. They won't let you hog the entire GPU. You need to handle memory pressure gracefully.

3. Support: WebGPU is currently a "modern browser" thing. Chrome, Edge, and recently Safari have landed support, but you’ll still need a WASM fallback for the Firefox holdouts (though even Firefox is getting close).
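The size and support constraints above can be sketched in a few lines. This is an illustration under simple assumptions: `estimateWeightBytes` and `pickDevice` are hypothetical helper names, the size estimate counts weights only (no activations or runtime overhead), and `pickDevice` takes the `navigator` object as a parameter so it can be exercised outside a browser. `navigator.gpu.requestAdapter()` is the standard WebGPU entry point and resolves to `null` when no suitable GPU is available.

```javascript
// Back-of-the-envelope weight size: parameters × bits per weight / 8 bytes.
function estimateWeightBytes(paramCount, bitsPerWeight) {
  return (paramCount * bitsPerWeight) / 8;
}

// Returns 'webgpu' only when an adapter is actually available, else 'wasm'.
async function pickDevice(nav) {
  if (!nav || !nav.gpu) return 'wasm';
  try {
    const adapter = await nav.gpu.requestAdapter();
    return adapter ? 'webgpu' : 'wasm';
  } catch {
    // Treat any failure while probing the GPU as "not supported".
    return 'wasm';
  }
}
```

By this arithmetic, a 70B model at 4 bits per weight is about 35 GB of weights alone, which is why it stays out of the browser, while a model with around 100 million parameters at 8 bits lands near the 100MB mark. In the browser you would call `await pickDevice(navigator)` and pass the result as the `device` option.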

Privacy as a Feature

Imagine building a document editor where the "AI Rewrite" button works offline. Or a medical app that summarizes patient notes without those notes ever touching a third-party server.

When you move inference to WebGPU, privacy stops being a bullet point in your TOS that nobody reads and starts being a technical guarantee. You can't leak data you never had in the first place.

If you’re still building AI features by wrapping a fetch() call to a cloud provider, you’re building a liability. It’s time to start trusting the hardware your users already paid for. The browser is no longer just a place to render HTML; it’s a distributed compute network waiting to be used.