Streams Are Not Arrays

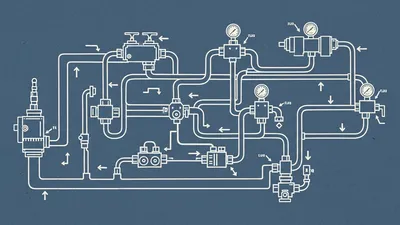

Your memory usage is spiking because you're treating streams like collections instead of conduits, and it's time to fix the plumbing.

You’ve probably watched your server’s memory usage climb like a heart rate monitor during a marathon, only to see the process keel over with a "JavaScript heap out of memory" error. It’s a frustrating rite of passage that usually happens because we’re treating data like a pile of bricks when we should be treating it like water in a pipe.

The "Bucket" Mental Model

Most of us learned to code by working with arrays. You have a list of items, you load them into memory, you map over them, you filter them, and you’re done. This is fine when you're dealing with 100 user records. It is a disaster when you're dealing with a 2GB CSV file or a high-traffic image processing service.

When you use fs.readFile(), you are telling Node: "Go get that entire file, bring the whole thing into RAM, and let me know when you're finished."

```javascript
// This is the "Bucket" approach. It works... until it doesn't.
const fs = require('fs');

fs.readFile('massive-log-file.log', (err, data) => {
  if (err) throw err;

  // readFile only calls back once the ENTIRE file has been loaded into memory.
  // If this file is 4GB and your RAM limit is 2GB,
  // your app is already dead before it reaches this line.
  const lines = data.toString().split('\n');

  console.log(`Processed ${lines.length} lines`);
});
```

The problem here is that the data is being held hostage in memory. You aren't doing anything with the first line until the last line has been loaded. It’s inefficient, and quite frankly, it’s dangerous for your production environment.

Enter the Conduit

Streams are not collections. They are a sequence of data pieces (chunks) that arrive over time. Instead of waiting for the whole "bucket" to fill up, you handle the water as it flows through the "conduit."

The mental shift is simple: Don't store it, just pass it along.
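To watch chunks arrive over time, here is a minimal sketch that reads a file through fs.createReadStream and records only the size of each chunk as it passes by. The helper names inspectChunks and demoChunks, the temporary file, and the 4096-byte highWaterMark (the stream's chunk/buffer size option) are illustrative assumptions, not part of the original example.

```javascript
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');

// Hypothetical helper: reads a file as a stream and reports chunk sizes.
// Each chunk is dropped after we record its length; the file is never held whole.
async function inspectChunks(filePath, highWaterMark) {
  const sizes = [];
  let total = 0;
  // Readable streams are async iterables, so for await pulls one chunk at a time.
  for await (const chunk of fs.createReadStream(filePath, { highWaterMark })) {
    sizes.push(chunk.length);
    total += chunk.length;
  }
  return { sizes, total };
}

// Hypothetical usage: write a 10,000-byte temp file, then read it in 4 KiB chunks.
async function demoChunks() {
  const tmpFile = path.join(os.tmpdir(), `chunk-demo-${process.pid}.txt`);
  await fs.promises.writeFile(tmpFile, 'x'.repeat(10000));
  try {
    return await inspectChunks(tmpFile, 4096);
  } finally {
    await fs.promises.unlink(tmpFile); // clean up the temp file either way
  }
}
```

Calling demoChunks() should report several chunks that add up to 10,000 bytes, rather than one 10,000-byte buffer delivered at the end.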

Here is the same task using a stream. Notice that we use the pipeline API: it's much cleaner than the old .pipe() method because it handles error cleanup automatically, destroying every stream in the chain if any stage fails.

```javascript
const fs = require('fs');
const { pipeline } = require('stream/promises');
const split = require('split2'); // A handy npm package that breaks a stream into lines

async function processLogs() {
  try {
    await pipeline(
      fs.createReadStream('massive-log-file.log'),
      split(),
      // The final stage only consumes lines, so a plain async function is enough.
      async function (source) {
        let count = 0;
        for await (const line of source) {
          // Only a small window of the file (roughly one 64 KiB read chunk
          // plus a few buffered lines) is in memory at a time, never the whole file.
          count++;
          if (line.includes('ERROR')) {
            // Do something with the error line
          }
        }
        console.log(`Processed ${count} lines total.`);
      }
    );
    console.log('Pipeline succeeded');
  } catch (err) {
    console.error('Pipeline failed', err);
  }
}

processLogs();
```

The Magic of Backpressure

One of the most common mistakes I see when people start using streams is ignoring backpressure.

Imagine a fast producer (a disk reading at 500MB/s) and a slow consumer (an API call that takes 100ms). If you just blindly shove data from the disk into the API, the data has to go *somewhere* while it waits for the API. That "somewhere" is your RAM (the internal buffer). If you don't respect backpressure, you’re just back to square one with a crashed process.

Node’s pipeline and pipe handle this for you. They tell the source: "Whoa, slow down, the next guy in line is busy. Stop reading for a second." This is the "plumbing" that makes streams so powerful.
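Under the hood, pipeline and pipe do roughly what this minimal sketch does by hand: write() returns false once the destination's buffer is full, and the producer waits for the 'drain' event before writing more. The names createSlowSink and writeWithBackpressure are hypothetical, and the tiny highWaterMark plus the 5 ms delay only simulate a slow consumer such as the API call above.

```javascript
const { Writable } = require('node:stream');
const { once } = require('node:events');

// Hypothetical slow consumer: each write takes ~5 ms to complete,
// standing in for a slow API call.
function createSlowSink(received) {
  return new Writable({
    highWaterMark: 4, // tiny buffer (in bytes) so backpressure kicks in immediately
    write(chunk, _encoding, callback) {
      received.push(chunk.toString());
      setTimeout(callback, 5); // report "done" only after the simulated delay
    },
  });
}

// Writes chunks while respecting backpressure. Returns how many times
// the producer had to pause and wait for 'drain'.
async function writeWithBackpressure(writable, chunks) {
  let pauses = 0;
  for (const chunk of chunks) {
    // write() returns false once the internal buffer reaches highWaterMark.
    if (!writable.write(chunk)) {
      pauses++;
      // "Whoa, slow down": stop producing until the consumer empties its buffer.
      await once(writable, 'drain');
    }
  }
  writable.end();
  await once(writable, 'finish'); // resolves after the last write has completed
  return pauses;
}
```

With a few 7-byte chunks such as 'chunk-0', every write() overshoots the 4-byte highWaterMark, so the producer pauses after each one instead of piling data up in RAM.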

Where People Get Tripped Up

The biggest "gotcha" is trying to access the length of a stream. You can't. It’s like asking how long a river is while you’re standing on the bank watching a leaf float by. You only know about the chunk of data currently in your hands.

Common pain points:

1. JSON parsing: You can't just JSON.parse() a stream. You need a streaming parser like JSONStream because a single chunk might contain half of a key-value pair.

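2. String vs. Buffer: By default, streams deal in Buffer objects. If you expect strings, call .setEncoding('utf8') or use a decoder such as Node's built-in StringDecoder. Calling .toString() on each chunk yourself can garble multi-byte characters that happen to be split across two chunks.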

3. Error Handling: If you use the old .pipe(), an error in the middle of the chain doesn't necessarily close the source or the destination. It’s a memory leak waiting to happen. Always use `pipeline` instead, either the callback version from `stream` or the promise version from `stream/promises`.
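To see the error-handling point in action, here is a minimal sketch in which a middle stage fails partway through an endless source. The stage names and the 'bad chunk' error are made up for the demo; the point is that pipeline rejects with that stage's error and has already destroyed the source, so nothing is left reading into memory.

```javascript
const { Readable, Transform, Writable } = require('node:stream');
const { pipeline } = require('node:stream/promises');

// An endless source. With plain .pipe(), a failing stage would leave it
// unpiped but never destroyed.
function* endlessLines() {
  let i = 0;
  while (true) yield `line ${i++}\n`;
}

async function runFailingPipeline() {
  const source = Readable.from(endlessLines());

  // Hypothetical stage that fails on its third chunk.
  let seen = 0;
  const flaky = new Transform({
    objectMode: true,
    transform(chunk, _encoding, callback) {
      seen++;
      if (seen === 3) callback(new Error('bad chunk'));
      else callback(null, chunk);
    },
  });

  // A sink that simply discards whatever reaches it.
  const sink = new Writable({
    objectMode: true,
    write(_chunk, _encoding, callback) {
      callback();
    },
  });

  try {
    await pipeline(source, flaky, sink);
    return { error: null, sourceDestroyed: source.destroyed };
  } catch (err) {
    // By the time the promise rejects, pipeline has destroyed every stream.
    return { error: err.message, sourceDestroyed: source.destroyed };
  }
}
```

Running runFailingPipeline() should resolve with the 'bad chunk' message and a destroyed source.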

Why You Should Care

Writing code that uses streams is admittedly more complex than using map() on an array. It requires more boilerplate and a bit more thinking about data types.

But the reward is a system whose memory usage stays roughly flat whether you’re processing 10MB or 10TB. It’s the difference between a server that crashes every Sunday during log rotation and one that hums along without a care in the world.

Stop building buckets. Start building pipes.