The Virtual DOM Is a Legacy Implementation Detail

Why the shift toward native Signals is finally ending the era of heavy diffing and re-renders in modern UI development.

We’ve spent the last decade convincing ourselves that recreating the entire UI tree in memory every time a person clicks a button is the pinnacle of engineering efficiency. It wasn't; it was just the best bandage we had for a browser API that lacked a native way to track state changes effectively.

The Virtual DOM (VDOM) was a revolutionary bridge, but the bridge is starting to look a lot like a bottleneck. As we move toward a future defined by Signals and fine-grained reactivity, it’s time to admit that the VDOM is no longer the star of the show—it’s a legacy implementation detail that we are finally learning to outgrow.

The Lie We Told About Performance

If you started web development anytime after 2013, you were likely told that "The DOM is slow, and the Virtual DOM is fast." This is a half-truth that has gained the status of dogma.

The DOM isn't inherently slow. What’s slow is the layout engine and the paint process that triggers when you make inefficient, sweeping changes to the DOM. The Virtual DOM didn’t magically make the browser faster; it just provided a way to batch updates and minimize the number of times we touched the "real" DOM.

But there is a massive hidden cost: Diffing.

In a VDOM-based library like React, when state changes, the framework runs your component functions again. It generates a new tree of JavaScript objects, compares it to the old tree, calculates the difference (the "patch"), and then applies those changes to the browser.

```jsx
import { useState } from "react";

// Every time 'count' changes, this entire function re-runs.
// A new object is created for the div, the h1, and the button.
function Counter() {
  const [count, setCount] = useState(0);

  return (
    <div>
      <h1>{count}</h1>
      <button onClick={() => setCount(count + 1)}>Increment</button>
    </div>
  );
}
```

In a small app, this is negligible. In a massive dashboard with thousands of nodes, the overhead of re-executing functions and diffing large object trees becomes a performance tax. We’ve spent years inventing complex workarounds like memo, useMemo, and useCallback just to tell the framework: *"Please, for the love of God, don't do the work you were designed to do."*
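To make that hidden cost concrete, here is a toy sketch of "generate a new tree, then diff it." It is not React's reconciler: there are no keys, no props comparison, and no Fiber scheduling, and the names h, renderCounter, and diff are purely illustrative. It simply counts how many nodes get compared to find a single changed text node.

```javascript
// h(): build a plain-object virtual node, roughly what JSX compiles down to.
function h(type, props, ...children) {
  return { type, props: props || {}, children };
}

// The Counter's "render": returns a brand-new tree on every call.
function renderCounter(count) {
  return h("div", null,
    h("h1", null, String(count)),
    h("button", null, "Increment"));
}

// diff(): walk both trees in lockstep, count comparisons, collect patches.
// Simplified: children are matched by position only.
function diff(oldNode, newNode, path = "root", patches = [], stats = { compared: 0 }) {
  stats.compared++;
  if (oldNode === undefined) {
    patches.push({ path, op: "insert", node: newNode });
  } else if (newNode === undefined) {
    patches.push({ path, op: "remove" });
  } else if (typeof oldNode === "string" && typeof newNode === "string") {
    if (oldNode !== newNode) patches.push({ path, op: "setText", value: newNode });
  } else if (typeof oldNode === "string" || typeof newNode === "string" || oldNode.type !== newNode.type) {
    patches.push({ path, op: "replace", node: newNode });
  } else {
    const len = Math.max(oldNode.children.length, newNode.children.length);
    for (let i = 0; i < len; i++) {
      diff(oldNode.children[i], newNode.children[i], `${path}/${i}`, patches, stats);
    }
  }
  return { patches, stats };
}

// One update: a new tree is built, five nodes are compared, one text change is found.
const { patches, stats } = diff(renderCounter(0), renderCounter(1));
console.log(stats.compared); // 5
console.log(patches); // a single "setText" patch at root/0/0 with value "1"
```

Even in this tiny tree, four of the five comparisons exist only to confirm that nothing changed.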

The Shift to Fine-Grained Reactivity

The industry is moving toward a different primitive: Signals.

Unlike VDOM-based state, which requires a top-down re-render to find what changed, Signals are reactive atoms that know exactly who is using them. When a Signal’s value changes, it notifies only the specific parts of the UI that depend on it. There is no diffing. There is no "re-rendering the whole component." There is only a surgical update to a specific DOM node.

Look at how a Signal-based approach (here in SolidJS syntax) differs:

```jsx
import { createSignal } from "solid-js";

function Counter() {
  const [count, setCount] = createSignal(0);

  // This function runs ONCE.
  // 'count()' isn't just returning a value: the compiler wraps {count()}
  // in a reactive effect, and reading the signal inside that effect is
  // what creates the subscription.
  return (
    <div>
      <h1>{count()}</h1>
      <button onClick={() => setCount(count() + 1)}>Increment</button>
    </div>
  );
}
```

In the example above, the Counter function executes exactly once during the initial mount. It sets up the DOM nodes and subscribes the <h1>'s text to the count signal. When you click the button, the framework doesn’t re-run the Counter function. It doesn't create a new VDOM tree. It simply updates the text inside the <h1> directly.

The "component" as we know it disappears after the first run. It's just a setup script for the DOM.
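The "setup script" idea can be sketched in plain JavaScript. This is an illustration under stated assumptions, not Solid's actual compiled output: createSignal and createEffect here are a minimal hand-rolled version (no cleanup, no batching), and plain objects stand in for DOM nodes so it runs outside a browser. The counter at the end is the point: the component function runs once, and later clicks only touch the text.

```javascript
// Minimal hand-rolled signal + effect (illustrative, not Solid's implementation).
let currentEffect = null;

function createSignal(value) {
  const subs = new Set();
  const read = () => {
    if (currentEffect) subs.add(currentEffect); // subscribe whoever is reading
    return value;
  };
  const write = (next) => {
    value = next;
    for (const fn of [...subs]) fn(); // re-run only the subscribers
  };
  return [read, write];
}

function createEffect(fn) {
  const run = () => {
    const prev = currentEffect;
    currentEffect = run;
    try { fn(); } finally { currentEffect = prev; }
  };
  run();
}

// Roughly the shape of a fine-grained component: create nodes once,
// bind one effect to the text, attach the click handler. Then it's done.
let setupRuns = 0;
function Counter() {
  setupRuns++;
  const [count, setCount] = createSignal(0);
  const h1 = { textContent: "" }; // stand-in for a real <h1>
  const button = { textContent: "Increment", onclick: () => setCount(count() + 1) };
  createEffect(() => { h1.textContent = String(count()); });
  return { h1, button };
}

const view = Counter();
view.button.onclick();
view.button.onclick();
console.log(view.h1.textContent, setupRuns); // 2 1
```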

The Problem with the "Dependency Array Tax"

One of the most frustrating aspects of the VDOM era has been the mental overhead of managing dependencies. If you've ever spent three hours debugging a useEffect loop or a stale closure in React, you've paid the dependency array tax.

```javascript
// The VDOM way: manual dependency management
useEffect(() => {
  console.log("Data changed:", data);
}, [data]); // If you forget this, good luck.
```

The VDOM requires you to be the "manual diffing engine" for your logic. Because the framework doesn't know *which values* a piece of code reads, you have to tell it.
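To see where the tax comes from, here is a sketch of the bookkeeping a hook runtime does. React compares each entry of the dependency array with the previous render's entry using Object.is and re-runs the effect only if something differs. The helper names below (depsChanged, makeEffectRunner) are hypothetical, and the "render loop" is simulated.

```javascript
// True if the effect should re-run. No dependency array at all means
// "run after every render"; otherwise compare entry by entry with Object.is.
function depsChanged(prevDeps, nextDeps) {
  if (prevDeps === undefined || nextDeps === undefined) return true;
  if (prevDeps.length !== nextDeps.length) return true;
  return nextDeps.some((dep, i) => !Object.is(dep, prevDeps[i]));
}

// Simulated component: render() is called once per "re-render" with the
// hand-written dependency list and the values the effect body reads.
function makeEffectRunner(effectBody) {
  let prevDeps;
  let runs = 0;
  return {
    render(deps, values) {
      if (depsChanged(prevDeps, deps)) {
        runs++;
        effectBody(values);
      }
      prevDeps = deps;
    },
    get runs() {
      return runs;
    },
  };
}

const seenCorrect = [];
const seenForgot = [];
const correct = makeEffectRunner(({ data }) => seenCorrect.push(data));
const forgot = makeEffectRunner(({ data }) => seenForgot.push(data));

for (const data of ["a", "b", "c"]) {
  correct.render([data], { data }); // list matches what the body reads
  forgot.render([], { data });      // 'data' left out of the list
}

console.log(correct.runs, seenCorrect); // 3 runs; saw a, b, c
console.log(forgot.runs, seenForgot);   // 1 run; saw only a, later changes silently missed
```

Nothing in the runtime checks that the list matches the body; that correspondence lives entirely in the developer's head.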

Signals flip this. Because the framework tracks access automatically (via a simple subscriber pattern), dependencies are discovered at runtime. If you use a signal inside an effect, the effect subscribes to it. If you stop using it, it unsubscribes.

```javascript
// The Signals way: automatic dependency tracking
effect(() => {
  // The framework "sees" you accessing data()
  // and creates the link automatically.
  console.log("Data changed:", data());
});
```

This isn't just "cleaner" code; it's a fundamental shift in how we think about the lifecycle of an application. We are moving from snapshot-based rendering (VDOM) to stream-based updates (Signals).

Under the Hood: How a Signal Actually Works

To understand why the VDOM now looks like legacy machinery, we need to look at how simple a Signal actually is. It’s essentially the Observer pattern with a cleaner API and automatic subscription.

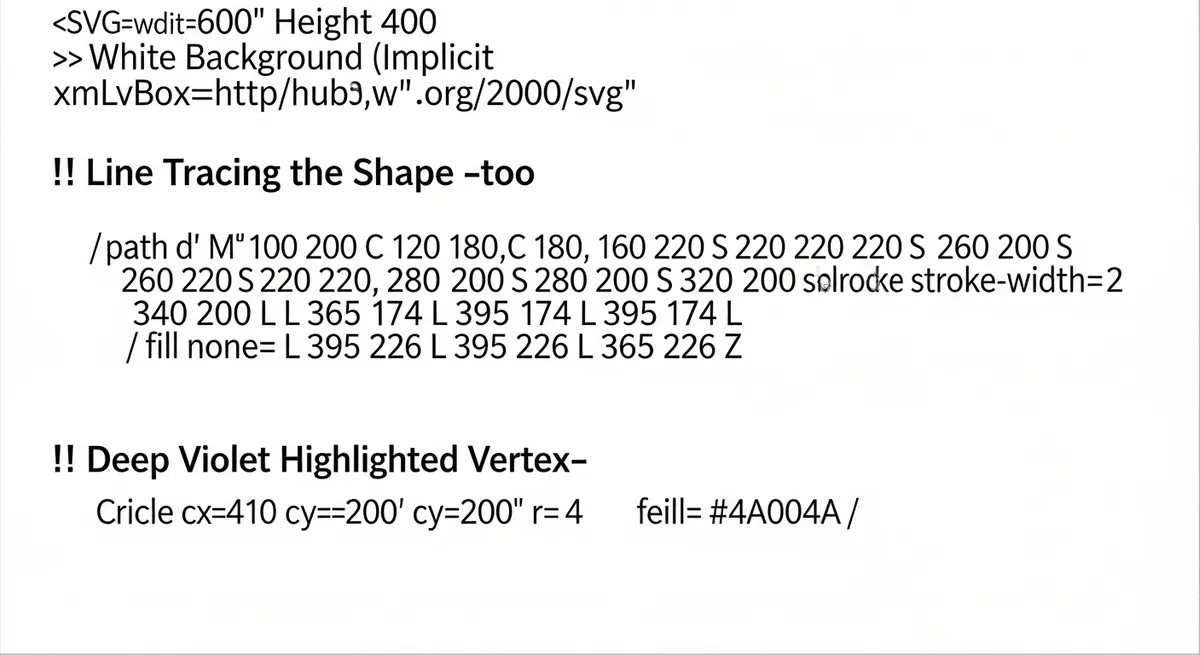

Here is a bare-bones implementation of a signal to illustrate the point:

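```javascript
// Stack of currently running effects; the top one is the active subscriber.
const context = [];

function createSignal(value) {
  const subscriptions = new Set();

  // Reading registers the running effect (if any) as a subscriber.
  const read = () => {
    const running = context[context.length - 1];
    if (running) subscriptions.add(running);
    return value;
  };

  // Writing stores the new value and re-runs every subscriber.
  // Iterate over a snapshot so effects that subscribe mid-loop aren't run twice.
  const write = (newValue) => {
    value = newValue;
    for (const sub of [...subscriptions]) {
      sub.execute();
    }
  };

  return [read, write];
}

function createEffect(fn) {
  const effect = {
    execute() {
      context.push(effect);
      try {
        fn();
      } finally {
        context.pop(); // keep the stack balanced even if fn throws
      }
    },
  };

  effect.execute();
}
```

This tiny bit of code is the foundation of the "VDOM-less" revolution. When you call read(), it checks whether an effect is currently running. If so, it adds that effect to the signal's set of subscribers. When you call write(), it re-runs every effect in that set.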

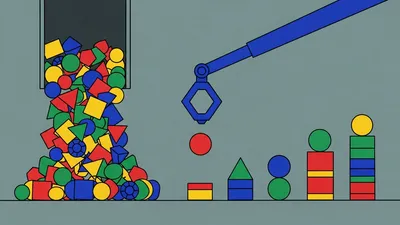

Compare the simplicity of this "push" model to the complexity of a VDOM reconciler (like React’s Fiber). The reconciler has to handle work priorities, time-slicing, tree traversal, and complex heuristics to determine if <div> A is the same as <div> B.

Signals don't care about the tree. They only care about the link between the data and the sink, the effect or DOM node that consumes it.
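One thing the bare-bones version above never does is unsubscribe, so the earlier claim that an effect stops listening to a signal it no longer reads needs one more piece. A common extension, sketched here with the same naming, is for each effect to remember which subscriber sets it joined and to leave all of them before it re-runs; whatever it reads on the new run becomes its new dependency list. The cleanup helper and the dependencies field are additions made for this sketch.

```javascript
const context = [];

function createSignal(value) {
  const subscriptions = new Set();
  const read = () => {
    const running = context[context.length - 1];
    if (running) {
      subscriptions.add(running);
      running.dependencies.add(subscriptions); // remember where we subscribed
    }
    return value;
  };
  const write = (newValue) => {
    value = newValue;
    // Snapshot first: re-running an effect deletes and re-adds it in this set,
    // which would make a live iteration loop forever.
    for (const sub of [...subscriptions]) sub.execute();
  };
  return [read, write];
}

// Remove the effect from every signal it subscribed to on its last run.
function cleanup(effect) {
  for (const dep of effect.dependencies) dep.delete(effect);
  effect.dependencies.clear();
}

function createEffect(fn) {
  const effect = {
    dependencies: new Set(),
    execute() {
      cleanup(effect); // forget last run's dependencies
      context.push(effect);
      try { fn(); } finally { context.pop(); }
    },
  };
  effect.execute();
}

const [showName, setShowName] = createSignal(true);
const [name, setName] = createSignal("Ada");
const log = [];

createEffect(() => {
  // `name` is only a dependency while `showName` is true.
  log.push(showName() ? name() : "(hidden)");
});

setShowName(false); // effect re-runs and stops reading name()
setName("Grace");   // no longer a dependency: the effect does not run
console.log(log);   // log holds "Ada" and "(hidden)" only
```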

The Memory Trade-off

I'd be lying if I said Signals were a free lunch. Everything in engineering is a trade-off.

The Virtual DOM is CPU intensive but memory efficient (relatively speaking). It creates a lot of short-lived objects that garbage collectors are very good at cleaning up. It doesn't need to maintain a complex graph of long-lived subscriptions.

Signals are CPU efficient but memory intensive. To make fine-grained updates work, the framework has to maintain a reactive graph in memory. Every signal needs to know its subscribers, and every subscriber needs to know its dependencies. If you aren't careful with cleanups, you can end up with "zombie" subscriptions that leak memory.

However, in the context of modern web apps, we have reached a point where CPU cycles (especially on mobile devices) are much more precious than a few extra kilobytes of RAM used for a subscription graph. Reducing the amount of JavaScript execution required to update a label is a massive win for battery life and responsiveness.
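Here is what a "zombie" subscription looks like in miniature. This sketch uses an explicit subscribe/unsubscribe signal rather than automatic tracking so the subscriber count is easy to inspect; mountRow and dispose are hypothetical names. Fine-grained frameworks usually automate the dispose step by tying subscriptions to an owner that is cleaned up on unmount, but the memory cost is the same idea: anything still sitting in a subscriber set stays reachable.

```javascript
// A stripped-down signal with explicit subscriptions and a visible subscriber count.
function createSignal(value) {
  const subscribers = new Set();
  return {
    read: () => value,
    write(next) {
      value = next;
      for (const fn of [...subscribers]) fn(value);
    },
    // Returns the matching cleanup ("dispose") function.
    subscribe(fn) {
      subscribers.add(fn);
      return () => subscribers.delete(fn);
    },
    get subscriberCount() {
      return subscribers.size;
    },
  };
}

// Hypothetical row component: binds a label to a shared theme signal.
// Plain objects stand in for DOM nodes.
function mountRow(theme) {
  const label = { className: theme.read() };
  const dispose = theme.subscribe((t) => {
    label.className = t;
  });
  return { label, dispose };
}

// Leaky: 100 rows are mounted and then dropped without calling dispose().
// Their callbacks (and the labels they close over) stay reachable from the signal.
const leakyTheme = createSignal("light");
for (let i = 0; i < 100; i++) mountRow(leakyTheme);
console.log(leakyTheme.subscriberCount); // 100

// Clean: the owner calls dispose() when each row is removed.
const cleanTheme = createSignal("light");
for (let i = 0; i < 100; i++) mountRow(cleanTheme).dispose();
console.log(cleanTheme.subscriberCount); // 0
```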

Why "Native" Signals Matter

You might be thinking, "Vue has had reactivity forever, and Svelte doesn't even use a VDOM. What's new?"

What’s new is the movement toward standardization. There is now a TC39 proposal to add native Signals to the JavaScript language itself.

For years, every framework had its own flavor of reactivity. If you wrote a library for Vue, it wouldn't work in Solid. If you wrote a state management tool for React, it was useless in Svelte. Native Signals promise a common language for reactivity.

Imagine a future where the browser understands signals natively. We could have:

1. Direct DOM-to-Signal binding: No framework code needed to update an input value.

2. Interoperable State: A signal created in a Preact component could be passed into a Lit element or a vanilla JS module without any "glue" code.

3. Optimized DevTools: Browsers could visualize the reactive graph of your application natively.

The Virtual DOM was a "Userland" solution to a missing platform feature. Now that the platform is catching up, the abstraction is becoming redundant.

The Compiler as the Great Equalizer

We can't talk about the death of the VDOM without mentioning the role of the compiler. Svelte was the first to really push the "No VDOM" narrative into the mainstream. It proved that you could take a declarative component and compile it into raw, imperative DOM commands.

```javascript
// Svelte compiled output (simplified)
function update(changed, ctx) {
  if (changed.count) {
    set_data(h1, ctx.count);
  }
}
```

This is fundamentally the same goal as Signals: avoiding the diff. Svelte does it at compile time, while Signal-based frameworks (Solid, Preact) do it at runtime.
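To make the compile-time side concrete, here is a runnable stand-in for that kind of output. It is hand-written, not generated by Svelte, and the names (createCounterFragment, createCounter, invalidate) are hypothetical. What it shows is that the compiler already knows the <h1> text is the only thing that depends on count, so an update is a flag check plus one write, with no tree to compare.

```javascript
// What a compiler could emit for the Counter: it knows statically that only
// the <h1> text depends on `count`. A plain object stands in for the DOM node.
function createCounterFragment(initialCtx) {
  const h1 = { textContent: String(initialCtx.count) };
  let writes = 0;
  return {
    h1,
    // Same shape as the snippet above: check the changed flag, write directly.
    update(changed, ctx) {
      if (changed.count) {
        h1.textContent = String(ctx.count);
        writes++;
      }
    },
    get writes() {
      return writes;
    },
  };
}

// Tiny runtime: every assignment goes through invalidate(), which records
// what changed and calls update(). No virtual tree is ever built.
function createCounter() {
  const ctx = { count: 0 };
  const fragment = createCounterFragment(ctx);
  function invalidate(key, value) {
    if (Object.is(ctx[key], value)) return; // unchanged: skip the update
    ctx[key] = value;
    fragment.update({ [key]: true }, ctx);
  }
  return { fragment, increment: () => invalidate("count", ctx.count + 1) };
}

const counter = createCounter();
counter.increment();
counter.increment();
console.log(counter.fragment.h1.textContent, counter.fragment.writes); // 2 2
```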

The trend is clear: We are moving away from frameworks that try to "guess" what changed by comparing snapshots, and moving toward frameworks that "know" what changed because the data told them.

Is React "Legacy" Now?

This is the spicy question. Is the most popular UI library in the world now legacy technology?

In terms of architectural patterns, yes. The VDOM-based, top-down re-render model is objectively less efficient at targeted updates than fine-grained reactivity. React's own team knows this, which is why they are building React Forget (since renamed the React Compiler), a compiler designed to automatically inject memoization into your code.

But React Forget is essentially an attempt to make the VDOM behave like a Signal-based system without changing the API. It's trying to hide the "diffing tax" by automating the workarounds we’ve been writing manually for years.

The reality is that React has a massive ecosystem and a "good enough" performance profile for 90% of use cases. It won't disappear tomorrow. But for new projects where performance, bundle size, and developer experience are the priorities, the "default" choice is shifting.

When you use a framework like SolidJS, you realize how much of your "React knowledge" was actually just "React troubleshooting." You don't need to know about useCallback because the function only runs once. You don't need useRef to persist values because variables don't get reset by re-renders.

Practical Example: The Heavy Table

Let's look at a real-world scenario: A table with 1,000 rows, where each row has a "status" indicator that updates every second via a WebSocket.

The VDOM Approach:

Each second, the state updates. The Table component re-renders. It creates 1,000 Row objects. The reconciler compares 1,000 virtual rows against the previous 1,000. It finds that only 5 rows had a status change. It updates those 5 DOM nodes.

*The cost: Creating 1,000 objects and performing 1,000 comparisons every second.*

The Signal Approach:

The WebSocket updates 5 specific Signals. Those 5 Signals have direct references to 5 specific <span> elements in the DOM. Those 5 elements update their text.

*The cost: 5 direct function calls and 5 DOM property assignments. The other 995 rows are never even looked at.*

The gap in bookkeeping here isn't a few percentage points; it's orders of magnitude: 1,000 object creations and comparisons versus 5 targeted updates, for the same 5 DOM changes.
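The two scenarios can be compared by counting operations rather than timing them. This is a simulation under simplifying assumptions: rows are plain objects, a "DOM write" is an assignment to a plain object's textContent, the VDOM side is reduced to a positional compare of row descriptions, and the signal side is a minimal hand-rolled signal. Both paths end with the same 5 writes; the difference is everything that happens before them.

```javascript
const ROWS = 1000;
const CHANGED = [3, 250, 500, 750, 999]; // rows whose status changed this tick

// VDOM-style tick: describe all rows again, then compare each with the previous description.
function vdomTick(prevRows, statuses) {
  const nextRows = statuses.map((status, id) => ({ id, status })); // 1,000 fresh objects
  let comparisons = 0;
  const patched = [];
  for (let i = 0; i < nextRows.length; i++) {
    comparisons++;
    if (prevRows[i].status !== nextRows[i].status) patched.push(i);
  }
  return { created: nextRows.length, comparisons, patched };
}

const before = Array.from({ length: ROWS }, () => "ok");
const after = before.slice();
for (const id of CHANGED) after[id] = "degraded";

const vdom = vdomTick(before.map((status, id) => ({ id, status })), after);
console.log(vdom.created, vdom.comparisons, vdom.patched.length); // 1000 1000 5

// Signal-style: each row's status cell subscribes to its own signal at mount.
function createSignal(value) {
  const subs = new Set();
  return {
    subscribe(fn) { subs.add(fn); fn(value); },
    write(next) { value = next; for (const fn of subs) fn(next); },
  };
}

let domWrites = 0;
const cells = Array.from({ length: ROWS }, () => ({ textContent: "" }));
const statusSignals = cells.map((cell) => {
  const s = createSignal("ok");
  s.subscribe((v) => { cell.textContent = v; domWrites++; });
  return s;
});

domWrites = 0; // ignore the initial mount
for (const id of CHANGED) statusSignals[id].write("degraded"); // the WebSocket's 5 updates
console.log(domWrites); // 5: the other 995 rows are never visited
```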

Moving Forward: How to Adapt

If you are a developer today, how should you view this shift?

1. Don't fear the VDOM, but stop worshipping it. It was a brilliant solution for its time. It made UI development predictable. But don't mistake "predictable" for "optimal."

2. Experiment with fine-grained frameworks. Spend a weekend with SolidJS or Svelte. Pay attention to how it feels to *not* have a dependency array. Notice how the code feels more like standard JavaScript and less like a framework-specific DSL.

3. Watch the TC39 Signals proposal. This is the most exciting thing happening in the JavaScript language right now. If it passes, it will change the landscape more than Promises or Async/Await did.

4. Evaluate your bottlenecks. If your app is slow, don't just throw React.memo at it. Understand *why* it's slow. Is it the diffing? Is it the component execution? Knowing the difference between the VDOM and Signals will help you make better architectural choices.

Final Thoughts

We are witnessing the "unbundling" of the framework. We used to need a massive, monolithic library to handle everything from state management to DOM diffing. Now, we are seeing those responsibilities shift.

Compilers (like Svelte) are handling the structural transformation.

Signals are handling the state propagation.

The Browser is (hopefully) going to handle the reactive primitives.

The Virtual DOM was the "Flash" of the 2010s—a necessary, proprietary-feeling abstraction that filled a hole in the web platform. As the platform matures, that abstraction is being absorbed into the foundation. We are finally getting back to the "Real" DOM, but this time, we have the tools to do it right.

The era of heavy diffing is ending. The era of the reactive graph has begun. And honestly? Your CPU—and your users—will thank you for it.