Zero-Downtime Database Migrations: The 'Expand and Contract' Pattern I Use for Every Release

A guide to evolving your database schema safely using the parallel-change pattern to avoid application outages during deployments.

The quickest way to ruin a Friday afternoon is to deploy a database migration that deletes a column while your application code is still trying to read from it. In a world of distributed systems and rolling deployments, "the code" and "the database" are rarely in perfect sync, and assuming they are is a recipe for 500 errors.

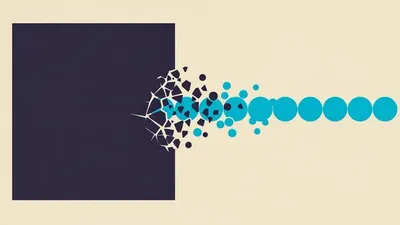

To avoid this, I use the Expand and Contract pattern (also known as Parallel Change). It’s a method for evolving your schema by breaking one big, destructive change into several small, backward-compatible steps.

The Problem: The "Big Bang" Migration

If you're renaming user_name to display_name, your instinct might be to do this in one PR:

- Run

ALTER TABLE users RENAME COLUMN user_name TO display_name; - Update the code to use

user.display_name.

The issue? During a rolling deploy, some server instances will have the new code (looking for display_name) while others still have the old code (looking for user_name). One of them _will_ crash.

The Five-Step Solution

Let’s walk through a real-world scenario: splitting a full_name column into first_name and last_name.

Phase 1: Expand (Add the new columns)

First, we add the new columns. At this stage, we don't touch the existing data or code. This is a purely additive, non-breaking change.

-- Migration 1

ALTER TABLE users

ADD COLUMN first_name TEXT,

ADD COLUMN last_name TEXT;Phase 2: Dual Writing

Now, we update our application logic. We want to start populating the new columns, but we keep the old column as the "source of truth." Every time a user is created or updated, we write to both.

// In your service layer

async function updateUser(user, newName) {

const [first, last] = newName.split(' ')

return db.user.update({

where: { id: user.id },

data: {

full_name: newName, // Still writing to the old source

first_name: first, // Now writing to the new source too

last_name: last,

},

})

}Phase 3: The Backfill

Existing records still have null values for first_name and last_name. We need to migrate that data.

Pro tip: For large tables, don't do this in one giant transaction. You'll lock the table and cause the very outage we're trying to avoid. Use a script to process records in batches.

-- Simple version for small tables

UPDATE users

SET

first_name = split_part(full_name, ' ', 1),

last_name = split_part(full_name, ' ', 2)

WHERE first_name IS NULL;Phase 4: Switch the Read Path

Now that the new columns are fully populated and kept up-to-date by Phase 2, we update the application to read from first_name and last_name. We can also stop writing to full_name.

At this point, full_name is "dead weight"—the code no longer cares it exists.

Phase 5: Contract (Remove the old column)

Once you're confident everything is working (usually after a few days in production), you perform the final cleanup.

-- Migration 2

ALTER TABLE users DROP COLUMN full_name;Why this is worth the effort

It feels like more work. It _is_ more work. You're doing two or three deployments instead of one. But here’s why I swear by it:

- Zero Downtime: Your users never see a "Something went wrong" page.

- Easy Rollbacks: If Phase 2 introduces a bug, you can roll back the code immediately. Since the

full_namecolumn is still there and being updated, no data is lost. - No Stress: You aren't staring at a monitoring dashboard with your heart in your throat, hoping the migration finishes before the new containers start.

The "Gotcha": Database Locks

While adding columns is usually fast in modern databases like PostgreSQL (it's a metadata-only change if there's no default value), dropping or altering columns can sometimes trigger an Access Exclusive lock.

Always check if your migration tool supports "concurrent" operations or if your specific database engine requires specific flags to avoid locking the entire table during the "Expand" or "Contract" phases.

Summary

The Expand and Contract pattern turns high-stakes database surgery into a series of boring, safe increments.

- Expand: Add the new structure.

- Dual Write: Support both old and new in code.

- Backfill: Migrate the old data.

- Read: Switch code to use the new structure.

- Contract: Remove the old structure.

It’s the most professional way to handle stateful changes. Give it a shot on your next release; your future self (and your users) will thank you.